Getting Started with MLflow

MLflow is an open-source platform for managing the machine learning lifecycle: tracking experiments and packaging, deploying, and serving models. In this tutorial, I will walk you through the basic syntax used in MLflow.

Create a working environment

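Recent MLflow releases no longer support Python 3.7, so create the environment with a newer interpreter:

conda create -n mlflow_env python=3.11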

conda activate mlflow_env

Install MLflow

pip install mlflow

To integrate MLflow into your project, just import it:

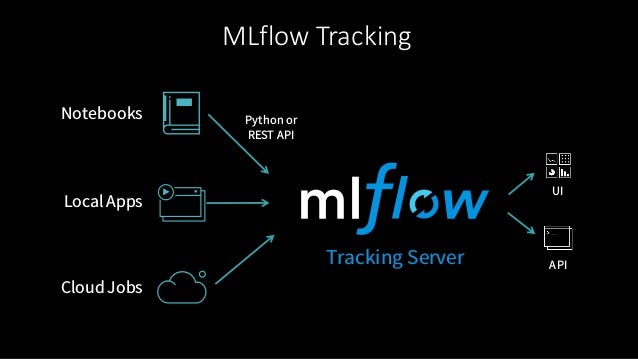

import mlflow

MLflow Tracking

MLflow Tracking lets us log experiment results. Each run records the following information:

1. Parameters: Parameters are variables that you change when tuning your model. You can log any key-value parameters you like. To log a single parameter, use:

mlflow.log_param("lr", 0.001)

To log a dictionary of parameters at once, use:

mlflow.log_params({"lr": 0.001, "epochs": 10})

2. Metrics: Metrics are the values you want to measure as a result of tweaking your parameters. Typical examples are F1 score, RMSE, and MAE. To track metrics, use:

mlflow.log_metric("MAE", mae)

mlflow.log_metric("RMSE", rmse)

3. Artifacts: Artifacts are any other output files you want to store, for example pickled models, PNGs of plots, or lists of feature importances. To store an artifact, pass the path of a local file:

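mlflow.log_artifact("feature_importance.png")  # any local file path (example name)

4. Code Version: If the run is launched from a Git repository, MLflow records the Git commit hash.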

5. Source: It also stores the name of the file executed to launch the run.

6. Start and End: The start and end times of the run are also logged.

Now let’s put these together and start a new experiment in MLflow.

To create a new experiment:

experiment_id = mlflow.create_experiment("my_experiment")

Then launch a run inside it and log to it:

with mlflow.start_run(experiment_id=experiment_id):

    mlflow.log_param("lr", 0.001)

    mlflow.log_metric("MAE", mae)

Using with mlflow.start_run(): ends the run automatically when the block exits, whether your code completes or raises an error. If you call mlflow.start_run() without with, you have to call mlflow.end_run() yourself at the end of the experiment to close the run.

Setting up and running the tracking server

We can log tracking data to the local file system or to a remote server. Here, we will explore different options for storing the logs.

1. Set storage to a local drive

To inspect the logs, stay in the same directory and run:

mlflow ui

By default, runs are logged to an mlruns folder in the current directory, and mlflow ui reads them from there. The UI listens on port 5000 by default, so open http://localhost:5000 or http://127.0.0.1:5000 in your browser.

If you want to save runs to a different location, add the following line at the beginning of your program:

mlflow.set_tracking_uri('file:/home/kiran/mlrun')

To display the data stored in that location in the browser, pass the storage location to mlflow ui as follows:

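mlflow ui --backend-store-uri file:/home/kiran/mlrun \
    --default-artifact-root file:/home/kiran/mlrun \
    --host 0.0.0.0 --port 5000

Tip: --backend-store-uri stores everything except artifacts (run metadata, parameters, metrics, and tags), while --default-artifact-root stores artifacts only. Note that --host 0.0.0.0 makes the UI reachable from other machines on your network; the default host is 127.0.0.1.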

2. Set storage to a local server

We will use a PostgreSQL database as the backend store. Let’s set up Postgres first.

cd Downloads

sudo apt-get install postgresql postgresql-contrib postgresql-server-dev-all

sudo apt install gcc

pip install psycopg2

Next, open psql and create a database and a user for MLflow:

sudo -u postgres psql

CREATE DATABASE mlflow_db;

CREATE USER mlflow_user WITH ENCRYPTED PASSWORD 'mlflow';

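GRANT ALL PRIVILEGES ON DATABASE mlflow_db TO mlflow_user;

On PostgreSQL 15 and later, ordinary users can no longer create tables in the public schema, so you may also need to make mlflow_user the owner of the database:

ALTER DATABASE mlflow_db OWNER TO mlflow_user;

To save runs to the local server, add the following line at the beginning of your program: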

mlflow.set_tracking_uri('http://127.0.0.1:5000')

Then start the tracking server on your machine:

mlflow server --backend-store-uri \
    postgresql://mlflow_user:mlflow@localhost/mlflow_db \
    --default-artifact-root file:/home/kiran/mlrun \
    --host 0.0.0.0 --port 5000

To check that it's working properly, open http://localhost:5000 in your browser.
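The backend-store URI packs the user, password, host and database into a single SQLAlchemy-style URL, so a password containing characters such as @ or : has to be percent-encoded. The helper below is a hypothetical convenience function, not part of MLflow; it only builds the string you would pass to --backend-store-uri, with PostgreSQL's default port 5432 written out explicitly.

```python
from urllib.parse import quote_plus


def postgres_store_uri(user, password, host, database, port=5432):
    """Build a URI of the form postgresql://user:password@host:port/database.

    Hypothetical helper: user and password are percent-encoded so that
    characters such as '@', ':' or '/' do not break the URI.
    """
    return (
        f"postgresql://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


# Values from the setup above.
print(postgres_store_uri("mlflow_user", "mlflow", "localhost", "mlflow_db"))
# postgresql://mlflow_user:mlflow@localhost:5432/mlflow_db

# A password with special characters gets encoded.
print(postgres_store_uri("mlflow_user", "p@ss:word", "localhost", "mlflow_db"))
# postgresql://mlflow_user:p%40ss%3Aword@localhost:5432/mlflow_db
```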

3. Set storage to a remote server

To save runs to a remote server, set the tracking URI to the URL of the hosted server:

mlflow.set_tracking_uri("<url of the hosted server>")

Tip: You can also store artifacts in an Amazon S3 bucket by passing an s3:// location as --default-artifact-root. For that you need boto3 installed and AWS credentials configured; see the AWS documentation on setting up credentials: https://docs.aws.amazon.com/sdk-for-java/v2/developer-guide/setup-credentials.html
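Before pointing your code at a remote server, it can help to confirm that the server answers at all. This sketch assumes the server exposes the /health endpoint that `mlflow server` serves (returning OK); servers behind a proxy or an authentication layer may behave differently. `tracking_server_is_up` is a hypothetical helper, and the URL below is a placeholder.

```python
import urllib.request


def tracking_server_is_up(base_url, timeout=2.0):
    """Return True if an MLflow tracking server at base_url answers /health.

    Hypothetical helper; assumes the server replies to GET /health with "OK".
    """
    url = base_url.rstrip("/") + "/health"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200 and response.read().strip() == b"OK"
    except OSError:
        # URLError, HTTPError, refused connections and timeouts all derive from OSError.
        return False


server_url = "http://127.0.0.1:5000"  # assumption: replace with your server's URL
print(tracking_server_is_up(server_url))
```

If it returns True, pass the same URL to mlflow.set_tracking_uri().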

Tips:

These are some tips that helped me when I started working with MLflow.

To organize your work, rather than logging everything to the Default experiment, create your own experiment and work inside it:

mlflow.set_experiment(experiment_name="your_experiment_name")

Once you have run your experiments, you can load their results straight into a pandas DataFrame instead of exporting them to a file:

mlflow.search_runs(experiment_ids=["your_experiment_id"])

If you want to run many sets of experiments, each with its own parameters and metrics, group them under a parent run by passing nested=True to mlflow.start_run() for each child run.

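Suppose you logged incorrect data by mistake and want to correct it. Use mlflow.search_runs() to find the run ID, then reopen that run and log the right value:

with mlflow.start_run(run_id="your_run_id") as run:

    mlflow.log_metric("MAE", 0.324)

For metrics, this appends a new value to the metric's history, and the UI shows the latest value. Parameters cannot be overwritten this way: logging an existing parameter with a different value raises an error.

You can also look into the mlflow-extend library, which provides more functionality. I hope this helps you get started with MLflow.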